Bubbles - Interactive Mirror

Spring 2025

Tools:

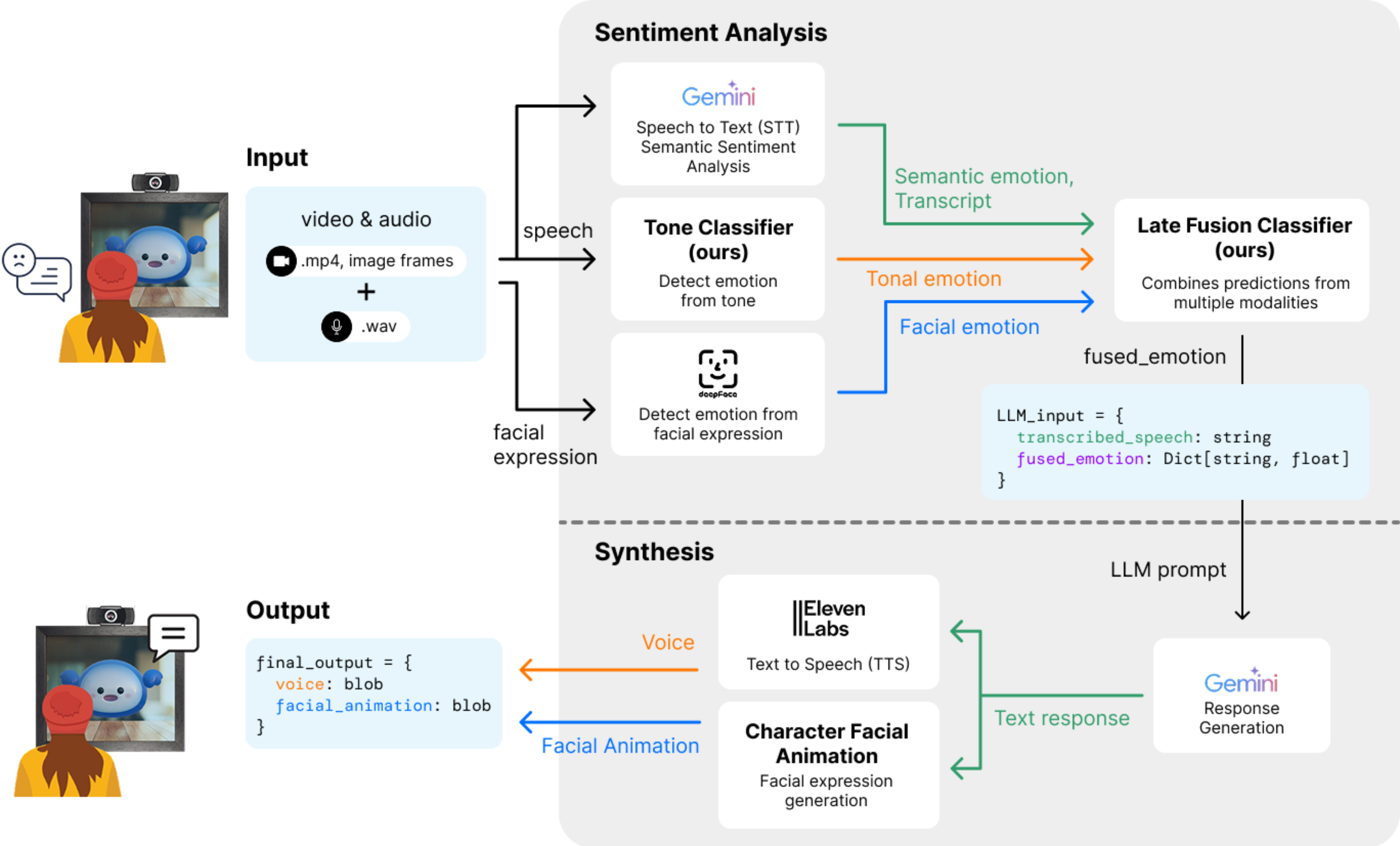

Bubbles is a real-time interactive mirror that analyzes the user’s emotional state through three modalities: facial expression, vocal tone, and speech content. The mirror is equipped with a webcam and a microphone that capture the user’s face and voice in real time. The system recognizes six emotion categories (happiness, sadness, anger, fear, disgust, and neutral) and tailors its responses to them.

It uses DeepFace for facial expression detection and Gemini for speech transcription and semantic emotion recognition. To analyze the user’s tone, I developed a custom MLP tone classifier trained on Wav2Vec2 embeddings extracted from the RAVDESS and CREMA-D datasets and from our team’s own recordings. The outputs of the three classifiers are then combined by a late-fusion algorithm based on Dempster-Shafer theory, which finds the user’s most likely emotion even when individual modalities are uncertain. Finally, Gemini generates a personalized response, which ElevenLabs converts into emotionally expressive speech.
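The write-up does not say how each classifier's output becomes evidence or how the final label is chosen, so the following Python sketch assumes one common recipe for Dempster-Shafer late fusion. Each modality's probabilities are discounted by a hypothetical reliability weight, with the remaining mass assigned to the full set of emotions (meaning "uncertain"); the three mass functions are combined with Dempster's rule of combination; and the decision is the class with the highest pignistic probability. The input numbers and reliabilities are made up for illustration.

```python
from itertools import product

# Frame of discernment: the six emotion categories the mirror recognizes.
EMOTIONS = frozenset({"happiness", "sadness", "anger", "fear", "disgust", "neutral"})


def to_mass(probs, reliability):
    """Turn one classifier's class probabilities into a mass function.

    Probabilities are normalized and scaled by a per-modality reliability in
    [0, 1]; the leftover 1 - reliability goes to the whole frame ("don't know").
    """
    total = sum(probs.values())
    mass = {frozenset({c}): reliability * p / total for c, p in probs.items() if p > 0}
    mass[EMOTIONS] = 1.0 - reliability
    return mass


def combine(m1, m2):
    """Dempster's rule: multiply masses of intersecting focal sets, renormalize away conflict."""
    combined = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        overlap = a & b
        if overlap:
            combined[overlap] = combined.get(overlap, 0.0) + wa * wb
        else:
            conflict += wa * wb  # mass on contradictory pairs of hypotheses
    if conflict >= 1.0 - 1e-12:
        raise ValueError("sources are in total conflict")
    return {s: w / (1.0 - conflict) for s, w in combined.items()}


def fuse(*masses):
    """Combine any number of mass functions pairwise (Dempster's rule is associative)."""
    result = masses[0]
    for m in masses[1:]:
        result = combine(result, m)
    return result


def pignistic(mass):
    """Spread each focal set's mass evenly over its members to get decision probabilities."""
    bet = dict.fromkeys(EMOTIONS, 0.0)
    for focal, w in mass.items():
        for e in focal:
            bet[e] += w / len(focal)
    return bet


# Hypothetical per-modality outputs and reliabilities, for illustration only.
face = {"happiness": 0.6, "neutral": 0.3, "sadness": 0.1}
tone = {"happiness": 0.2, "neutral": 0.5, "sadness": 0.3}
text = {"happiness": 0.7, "neutral": 0.2, "anger": 0.1}

fused = fuse(to_mass(face, 0.8), to_mass(tone, 0.6), to_mass(text, 0.9))
bet = pignistic(fused)
print(max(bet, key=bet.get))  # → happiness
```

Discounting keeps a low-reliability modality from dominating: much of its mass sits on the full frame, which intersects every class and therefore defers to the other sources.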

The response is delivered by an animated 3D character named “Bubbles” with matching facial expressions.
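The project's expression pipeline draws on facial action unit (AU) research, but the mapping onto the character's rig is not described. As a sketch, assuming the rig exposes one shape key per action unit (hypothetical names such as `AU06` and `AU12`), each emotion can be mapped to a prototypical AU combination from the Facial Action Coding System literature (EMFACS-style prototypes, e.g. AU6 + AU12 for happiness) and blended by the emotion probabilities. Only `apply_to_mesh` touches Blender: it writes to the standard `obj.data.shape_keys.key_blocks` collection and must run inside Blender.

```python
# Prototypical AU combinations per emotion (EMFACS-style); neutral activates none.
EMOTION_AUS = {
    "happiness": {6, 12},              # cheek raiser, lip corner puller
    "sadness": {1, 4, 15},             # inner brow raiser, brow lowerer, lip corner depressor
    "anger": {4, 5, 7, 23},            # brow lowerer, upper lid raiser, lid tightener, lip tightener
    "fear": {1, 2, 4, 5, 7, 20, 26},   # brow raisers/lowerer, lid raiser/tightener, lip stretcher, jaw drop
    "disgust": {9, 15, 16},            # nose wrinkler, lip corner depressor, lower lip depressor
    "neutral": set(),
}


def au_weights(emotion_probs, intensity=1.0):
    """Blend AU activations from an emotion distribution; each weight is clamped to [0, 1]."""
    weights = {}
    for emotion, p in emotion_probs.items():
        for au in EMOTION_AUS.get(emotion, ()):
            weights[au] = weights.get(au, 0.0) + p * intensity
    return {au: min(1.0, w) for au, w in sorted(weights.items())}


def shape_key_values(emotion_probs, intensity=1.0):
    """Map AU weights to hypothetical shape-key names like 'AU06'."""
    return {f"AU{au:02d}": w for au, w in au_weights(emotion_probs, intensity).items()}


def apply_to_mesh(obj, values):
    """Set shape-key values on a Blender mesh object (call from inside Blender)."""
    shape_keys = obj.data.shape_keys
    if shape_keys is None:  # mesh has no shape keys at all
        return
    for name, value in values.items():
        block = shape_keys.key_blocks.get(name)
        if block is not None:  # skip AUs the rig does not provide
            block.value = value


print(shape_key_values({"happiness": 0.7, "sadness": 0.3}))
```

Weighting AUs by the emotion distribution, rather than snapping to the single top emotion, lets the character show mixed expressions such as a mostly happy face with a hint of sadness; whether the actual pipeline blends this way is an assumption.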

My main contributions to this project were building and training the tone classifier and creating the facial expression generation pipeline in Blender, based on facial action unit research.
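The tone classifier's architecture and training setup are not given here, so this is a dependency-free Python sketch under stated assumptions: the frame-level Wav2Vec2 embeddings for a clip are mean-pooled into one vector, passed through a single ReLU hidden layer, and mapped to softmax probabilities over the six emotions, with training by plain SGD on cross-entropy loss. In a real setup the frame embeddings would come from a pretrained model such as Hugging Face's `Wav2Vec2Model` (its `last_hidden_state`, 768-dimensional for the base model), and training would use a framework such as PyTorch; here random vectors stand in for embeddings, and the layer sizes are arbitrary.

```python
import math
import random

# Six output classes used by the project.
EMOTIONS = ["happiness", "sadness", "anger", "fear", "disgust", "neutral"]


def mean_pool(frames):
    """Average frame-level embeddings (equal-length vectors) into one clip-level vector."""
    return [sum(col) / len(frames) for col in zip(*frames)]


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


class ToneMLP:
    """Pooled embedding -> ReLU hidden layer -> softmax over EMOTIONS."""

    def __init__(self, in_dim, hidden_dim, seed=0):
        rng = random.Random(seed)
        s1, s2 = 1 / math.sqrt(in_dim), 1 / math.sqrt(hidden_dim)
        self.w1 = [[rng.uniform(-s1, s1) for _ in range(in_dim)] for _ in range(hidden_dim)]
        self.b1 = [0.0] * hidden_dim
        self.w2 = [[rng.uniform(-s2, s2) for _ in range(hidden_dim)] for _ in EMOTIONS]
        self.b2 = [0.0] * len(EMOTIONS)

    def _forward(self, x):
        z1 = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(self.w1, self.b1)]
        h = [max(0.0, v) for v in z1]
        z2 = [sum(w * hj for w, hj in zip(row, h)) + b for row, b in zip(self.w2, self.b2)]
        return z1, h, softmax(z2)

    def predict_proba(self, frames):
        _, _, p = self._forward(mean_pool(frames))
        return dict(zip(EMOTIONS, p))

    def train_step(self, frames, label, lr=0.1):
        """One SGD step on cross-entropy loss; returns the loss before the update."""
        x = mean_pool(frames)
        z1, h, p = self._forward(x)
        y = EMOTIONS.index(label)
        dz2 = [pk - (1.0 if k == y else 0.0) for k, pk in enumerate(p)]
        # Backpropagate to the hidden layer before updating w2.
        dh = [sum(self.w2[k][j] * dz2[k] for k in range(len(dz2))) for j in range(len(h))]
        dz1 = [g if z > 0 else 0.0 for g, z in zip(dh, z1)]  # ReLU derivative
        for k, g in enumerate(dz2):
            self.w2[k] = [w - lr * g * hj for w, hj in zip(self.w2[k], h)]
            self.b2[k] -= lr * g
        for j, g in enumerate(dz1):
            self.w1[j] = [w - lr * g * xi for w, xi in zip(self.w1[j], x)]
            self.b1[j] -= lr * g
        return -math.log(p[y])


rng = random.Random(1)
# Stand-in for a (frames x dim) matrix of Wav2Vec2 hidden states.
clip = [[rng.uniform(-1, 1) for _ in range(16)] for _ in range(50)]
model = ToneMLP(in_dim=16, hidden_dim=8)
before = model.predict_proba(clip)["anger"]
losses = [model.train_step(clip, "anger") for _ in range(20)]
after = model.predict_proba(clip)["anger"]
print(round(before, 3), round(after, 3))
```

The per-class probabilities returned by `predict_proba` are the kind of output a late-fusion step can consume directly.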

For more information, please see the project video, our GitHub repository, and the picture gallery.